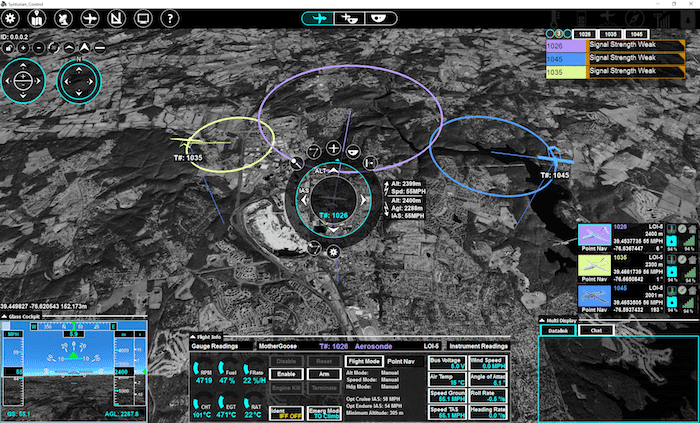

Synturian command, control and collaboration user interface. Photo courtesy of Textron Systems

Synturian, a new command, control and collaboration technology developed by Textron Systems, is ready to give military pilots the ability to control drones in manned-unmanned teaming operations with just a few inputs on a cockpit display.

Textron Systems has been developing Synturian for several years and proved its ability to support manned-unmanned aircraft teaming during a July 2018 proof-of-concept demonstration. The demonstration paired Textron Aviation’s Scorpion light attack jet with simulated Nightwarden and Aerosonde drones. During an interview at the Association of the U.S. Army’s annual conference, Justin Kratz, a systems engineer for Textron Systems’ unmanned systems division, said Synturian can link any and all unmanned, manned or optionally piloted systems on the battlefield.

“One of the biggest things we’re trying to do is flip the script from technology dictating how the systems are deployed and operated, to allowing operators and commanders to deploy the tech how they deem necessary to run their operations,” said Kratz.

The Scorpion proof-of-concept demonstration was made possible by installing Synturian software on the aircraft’s mission computer, which allowed the pilots to perform flight acquisition and control of the simulated unmanned aircraft using inputs on an existing cockpit display. Textron test pilots used Synturian to set flight route waypoints and to change the heading, airspeed and altitude of the two simulated unmanned aircraft.

A cockpit display in the Scorpion jet using Synturian. Photo courtesy of Textron Systems

But according to Kratz, Synturian’s open architecture design, based on the Future Airborne Capability Environment (FACE) standard, extends its capabilities well beyond manned-unmanned aircraft teaming.

That’s because Synturian is a family of systems, including a ground control system and a remote control offering, that can bring its map-centric user interface to monitors, tablets and smartphones. It could allow manned and unmanned aircraft pilots to share target information with ground vehicles or even submarines and ships.

Synturian’s open architecture and multi-vehicle capability align well with mosaic warfare, the approach the Defense Advanced Research Projects Agency (DARPA) is pursuing for how the air, land, sea, space and cyber domains will engage in future conflicts. Tim Grayson, director of DARPA’s Strategic Technology Office, outlined the mosaic warfare approach at DARPA’s 60th anniversary symposium last month.

Grayson described a scenario in which a fleet of fighter jets is on a pre-assigned mission to destroy an enemy radar. Meanwhile, a U.S. Army land unit comes across a high-value pop-up target. Today, that unit would need to contact a command-and-control coordination center, which would have to manually verify that a fighter was available to support the land unit.

With a system like Synturian, however, the mission’s air and land units would have a much more advanced form of collaboration available to them.

“Think of it as distributed autonomy,” said Kratz. “We’re seeing increased interest from DOD in adding more artificial intelligence (AI) to manned and unmanned aircraft, ground vehicles and even submarines. Some of these platforms do not have the processing power to do all of the AI that maybe you want to do in the air. With Synturian, some of that can be pushed to the ground.”

The Army already uses Textron’s Universal Ground Control Station for drone pilots, as well as its One-System Remote Video Terminal, which enables manned-unmanned teaming between Gray Eagle and Shadow drones and manned platforms. Those systems are good initial targets for Synturian, which has not yet been fielded by DOD or its allies.

Kratz’s unmanned systems engineering team is also researching new concepts for Synturian’s user interface.

“Depending on the operations scenario, the operator doesn’t always have the ability to be heads-down looking into a tablet or workstation. We’re researching the ability to use the same architecture and swap the user interface paradigm to something that supports augmented reality or even virtual reality in the future,” said Kratz.