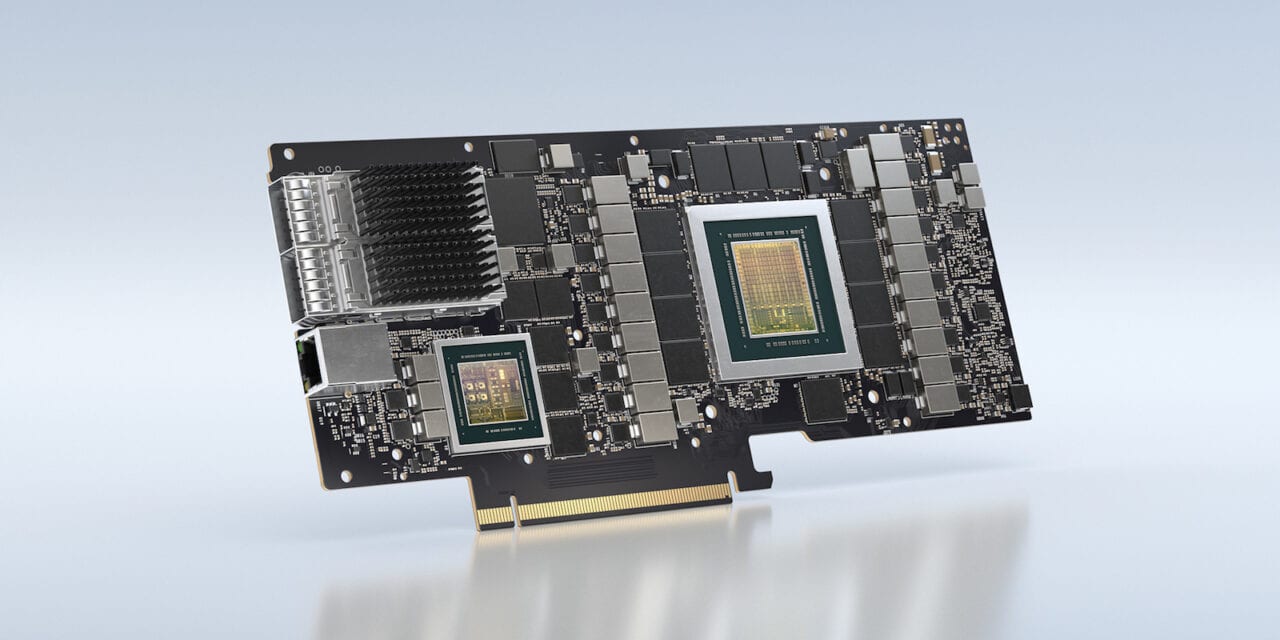

NVIDIA unveiled a new kind of processor, the BlueField-2 family of data processing units (DPUs), during the keynote session of its 2020 GTC virtual event on Oct. 5. (NVIDIA)

During the second GPU Technology Conference (GTC) of 2020, delivered from his kitchen on Oct. 5, NVIDIA CEO Jensen Huang showed the future of the software-defined data center, in which all of the infrastructure required to power a traditional data center can run on a new kind of processor that the graphics processing unit (GPU) provider calls the “data processing unit” (DPU).

NVIDIA’s GTC is traditionally held periodically at locations around the globe. At these events, the company’s leadership and representatives from some of its largest customers explain how GPU technology is enabling current and future advances in artificial intelligence (AI) and machine learning. Applications include self-driving cars, conversational AI, autonomous drone flight control systems and even the use of AI to write and program the very algorithms that enable new AI and machine learning applications (yes, you read that right: AI writing AI).

Despite being confined to his kitchen by the COVID-19 pandemic for both the May and October GTC events this year, Huang did not disappoint, using a nine-part series of keynote videos to explain how NVIDIA is enabling a new “age of AI.”

“AI requires a whole reinvention of computing – full-stack rethinking – from chips to systems, algorithms, tools, the ecosystem,” Huang said, standing in front of the stove of his Silicon Valley home.

The technical specifications of NVIDIA’s new BlueField DPU family. (NVIDIA)

One of the most disruptive advancements Huang revealed, with potential applications for aerospace and every other major technology-driven industry, is the new BlueField-2 DPU. This new family of processing units is the linchpin for transitioning from data centers that depend on massive physical space and racks of servers to a completely software-defined virtual data center. NVIDIA describes the BlueField family of DPUs as capable of delivering a broad set of software-defined networking, storage, security, and management services with up to 200 Gbps Ethernet connectivity.

“What used to be dedicated hardware appliances are now software services running on CPUs [central processing units]. The entire data center is software programmable and can be provisioned as a service. Virtual machines send packets across virtual machines and virtual routers. Perimeter firewalls are virtualized and can protect every node,” Huang said.

Today, much of that virtualization depends on the hypervisor. A hypervisor is software that abstracts a server’s hardware so that it can run multiple virtual machines, each accessing the underlying physical resources of the server and sharing them with the others. Most hypervisors today are either deployed as the first piece of software directly on a server or loaded on top of an existing operating system. Computing resources are then allocated through the hypervisor before they reach each virtual machine, which acts as its own dedicated computing unit with its own dedicated computing resources.

With BlueField, NVIDIA is disrupting this use of the hypervisor. Instead, it is providing a dedicated new DPU, an associated software development kit and even a new type of data center operating system to accelerate key data center security, networking, and storage tasks, including isolation, root of trust, key management, and data compression.

According to Huang, a single BlueField-2 DPU can deliver the same data center services that would otherwise consume up to 125 CPU cores.

“It is a 7 billion transistor marvel, a programmable data center on a chip,” Huang said.

NVIDIA released a three-year roadmap for its new BlueField DPU. (NVIDIA)

NVIDIA’s move toward a fully software-programmable data center follows two recent announcements that will be central to the next-generation data-center-on-a-chip concept. On Sept. 13, NVIDIA announced a definitive agreement to acquire Arm, one of the world’s largest computing chip suppliers, for $40 billion. The company also announced a new partnership with VMware, one of the largest providers of cloud computing and virtualization software services.

VMware’s virtualization platform is used by 70 percent of the world’s companies and will serve as the operating system for NVIDIA’s new BlueField DPU family.

“Together we’re porting VMware onto BlueField. BlueField is the data center infrastructure processor and VMware is the data center infrastructure OS. We will offload virtualization, networking, storage and security onto BlueField and enable distributed zero-trust security,” Huang said.

The NVIDIA CEO went on to demonstrate how the automobile manufacturer Volvo uses product lifecycle management software and computer-aided design today, and how that work could be accelerated by a software-defined data center. During the demonstration, a simulated distributed denial-of-service (DDoS) attack shuts down the automobile design program of a Volvo designer whose infrastructure services run on a CPU, whereas a designer whose services run on a DPU is completely unaffected.

As more aerospace design and manufacturing processes shift toward simulation and the cloud across the product lifecycle, the data-center-on-a-chip concept could be a boon for accelerating the introduction of new technologies to various segments of the aerospace industry in the near future. The Federal Aviation Administration (FAA), for example, first started transitioning from owning its own data centers to adopting cloud storage and networking under a $108 million, 10-year contract awarded to CSC Government Solutions in 2015. The contract was intended to let the agency take advantage of cloud computing models such as Software as a Service, Platform as a Service, and Infrastructure as a Service.

Additionally, as airports and airlines continue looking at how they can adopt more digital solutions to enable contactless travel, including contact tracing, virtual assistants and cloud computing for things like biometrics, the availability of data center infrastructure on a chip could become increasingly appealing. NVIDIA also recently highlighted how American Airlines’ adoption of its data science workstations has revolutionized the way the international carrier manages and predicts delays and scheduling conflicts for air cargo shipments.

“The data center has become the new unit of computing,” said Huang. “DPUs are an essential element of modern and secure accelerated data centers in which CPUs, GPUs, and DPUs are able to combine into a single computing unit that’s fully programmable, AI-enabled and can deliver levels of security and compute power not previously possible.”