The second joint study published by EASA and Daedalean focuses on key elements of the W-shaped development model proposed for artificial intelligence and machine learning-enabled avionics hardware and software. (EASA)

The European Union Aviation Safety Agency (EASA) and Daedalean published their second joint report, Concepts of Design Assurance for Neural Networks (CoDANN) II, on May 21. The report explains key machine learning software design, development, and verification methods for using neural networks in avionics systems.

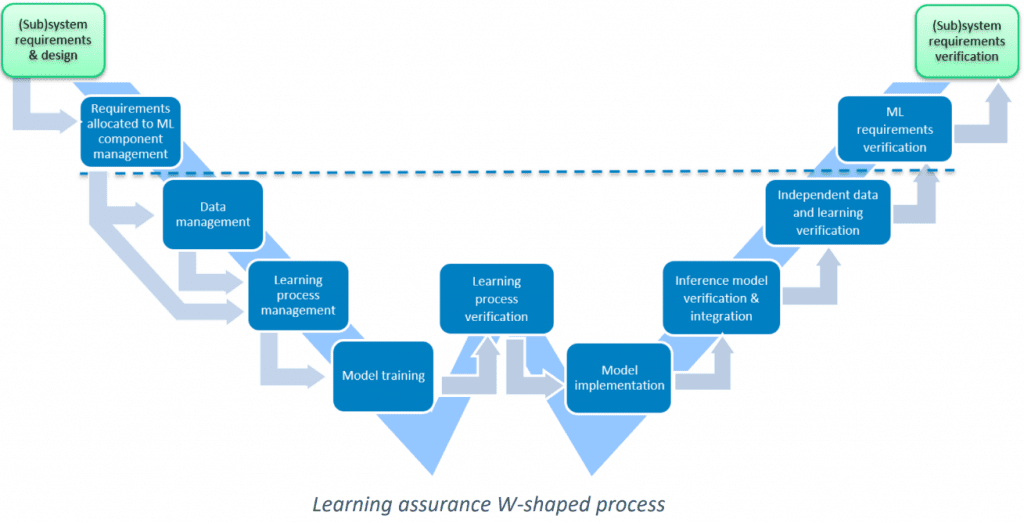

Whereas CoDANN I, published in 2020, established the baseline understanding that the future use of neural networks in safety-critical avionics applications is technically feasible, CoDANN II focuses on some of the more challenging aspects of the new W-shaped development model, including the model implementation, inference model verification, and learning verification stages. The 136-page report outlines the implementation and inference parts of the W-shaped development process, defines the role of explainability for the various actors involved in the certification process, and provides a system safety assessment process for integrating neural networks into avionics systems.

Moreover, the document defines the learning environment for a neural network as one that must be able to train the machine learning model through specific operations, which could include but are not limited to “backpropagation, update of weights, computation of errors.” The inference environment, by contrast, must meet the operational platform’s requirements, that is, the safety-critical and real-time performance requirements of the machine learning-enabled system.
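To make the three quoted learning-environment operations concrete, here is a minimal sketch of a single training step. It is not taken from CoDANN II: it assumes a toy model (one linear neuron trained on mean squared error), where backpropagation reduces to one application of the chain rule. The names `predict` and `train_step` and the sample data are hypothetical.

```python
# Minimal sketch (not from CoDANN II) of the three quoted operations:
# computation of errors, backpropagation, and update of weights,
# for a hypothetical single linear neuron trained on mean squared error.


def predict(w: float, b: float, x: float) -> float:
    """Forward pass of a single linear neuron: y_hat = w * x + b."""
    return w * x + b


def train_step(w, b, xs, ys, lr):
    """One gradient-descent step; returns (new_w, new_b, loss_before_update)."""
    n = len(xs)
    # Computation of errors: residuals between predictions and targets.
    errors = [predict(w, b, x) - y for x, y in zip(xs, ys)]
    loss = sum(e * e for e in errors) / n
    # Backpropagation: gradients of the loss w.r.t. w and b via the chain rule.
    grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / n
    grad_b = sum(2 * e for e in errors) / n
    # Update of weights: step against the gradient, scaled by the learning rate.
    return w - lr * grad_w, b - lr * grad_b, loss


if __name__ == "__main__":
    # Hypothetical training data drawn from y = 2x + 1.
    xs = [0.0, 1.0, 2.0, 3.0]
    ys = [1.0, 3.0, 5.0, 7.0]
    w, b = 0.0, 0.0
    for _ in range(2000):
        w, b, loss = train_step(w, b, xs, ys, lr=0.05)
    print(f"w={w:.3f} b={b:.3f} loss={loss:.2e}")
```

In a real learning environment the same loop runs over millions of weights on specialized hardware, which is why the report treats the whole training process, not just the model code, as something that influences the output.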

Daedalean’s visual traffic detection system combines avionics-grade cameras and a convolutional neural network with pre- and post-processing components to detect objects in camera images, mainly aircraft, and to determine whether they are part of cooperative or uncooperative traffic. Using a tracking and filtering mechanism with a weighted value system, the learning assurance and inference verification methods featured in the report aim to ensure, with the highest possible degree of probability, that an object detected by the camera is in fact a helicopter, airplane, drone, or other type of aircraft.
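The exact weighting scheme behind Daedalean’s tracking and filtering mechanism is not described here. As a purely hypothetical illustration of the general idea, the sketch below keeps an exponentially weighted moving average of per-frame detection confidence for each tracked object and only confirms the object once the smoothed score and the number of observed frames both clear a threshold. The `Track` class, `alpha`, `threshold`, and `min_frames` values are all assumptions, not parameters from the report.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical track state: smoothed detection confidence for one object."""
    score: float = 0.0
    frames: int = 0


def update_track(track: Track, detection_conf: float, alpha: float = 0.3) -> Track:
    """Blend a new per-frame confidence into the track with weight alpha.

    Exponentially weighted moving average: recent frames count more, but a
    single noisy frame cannot flip the decision on its own.
    """
    track.score = alpha * detection_conf + (1 - alpha) * track.score
    track.frames += 1
    return track


def is_confirmed(track: Track, threshold: float = 0.7, min_frames: int = 3) -> bool:
    """Report the object as an aircraft only after enough consistent evidence."""
    return track.frames >= min_frames and track.score >= threshold


if __name__ == "__main__":
    # Hypothetical per-frame network confidences for one tracked object.
    track = Track()
    for frame, conf in enumerate([0.9, 0.9, 0.9, 0.9, 0.9], start=1):
        update_track(track, conf)
        print(frame, round(track.score, 3), is_confirmed(track))
```

The design choice being illustrated is temporal filtering: a consistent detection across several frames is trusted, while a one-frame spike, such as a bird or a glint, decays away before it can be reported.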

EASA and Daedalean worked on the new report as part of an Innovation Partnership Contract (IPC), and its publication follows last month’s release of the new concept paper, “First Usable Guidance for Level 1 Machine Learning Applications.” Dr. Luuk van Dijk, CEO and co-founder of Daedalean, told Avionics International in an emailed statement that last month’s guidance and the new CoDANN II report are helping to establish the certification baseline for the eventual entry into service of the company’s neural network-based visual traffic detection system.

The report uses Daedalean’s visual traffic detection system as an example of how a safety-critical avionics system could progress through the steps of the W-shaped development model.

“Of course, the last word on obtaining certification is the regulator, not us, but we have at least enough to file a real application for a real system,” van Dijk said. “The First Usable Guidance is groundbreaking in that it sets out the objectives to be met for the first applications. ‘Pilot advisory’ applications may sound like a modest first step but don’t be fooled: these are use cases which carry actual safety risks, so being able to argue that a system like Visual Traffic Detection or Visual Landing Guidance, where failure has real consequences, is safe and fit for purpose actually is a massive step forward for the whole industry.”

By identifying design data sets and learning environment mechanisms, as well as a number of research groups and initiatives that are advancing industrial-scale understanding of machine learning and neural networks for safety-critical applications, the report offers a deep dive into how software development engineers can start thinking about how to demonstrate the learning assurance and inference aspects of a machine learning-enabled avionics system. The report also explains at length the additional steps the W-shaped model requires for neural networks, most notably the learning assurance step, which must be performed in addition to the hardware and software development steps that precede hardware and software verification in the traditional V-shaped model.

“It is important to not treat ‘certification’ as this paper hurdle that stands between you and the market, but that build something that works well and is safe first, and that your own engineering team has tried to critically disprove this before you accept it internally. Now that the regulator has the first means to follow the story along, nothing should stand in the way, but the rubber is about to hit the tarmac,” van Dijk said.

Engineers are also given hardware, software, and system considerations for the inference environment, the actual operational platform used in flight to run the neural network. The report places hardware typically used for inference into two categories. The first is commercial off-the-shelf (COTS) hardware in the form of central processing units (CPUs) or graphics processing units (GPUs). The second is customizable hardware, with logic implemented in field-programmable gate arrays (FPGAs) or application-specific integrated circuits (ASICs).

The report notes that GPUs offer significantly more computing power than off-the-shelf CPUs, especially for the pre- and post-processing computations applied to the raw output data generated by a neural network.

“The learning environment resembles a classical software development environment, but unlike compilation of code into a binary, the whole training process influences the output, from data and model implementation on high-level frameworks to training on specialized hardware,” the report says. “Naturally, the inference environment is very similar to classical avionics software and hardware, however, there are particularities due to the specialized hardware that might be required to run neural networks and their massively parallelized operations.”

Using Daedalean’s visual traffic detection system as an example, the report analyzes how the system’s neural network could theoretically follow the W-shaped model’s learning assurance and inference model verification and integration steps to ultimately progress through the full machine learning development cycle.

EASA already used some of the findings from the CoDANN II project in the first usable guidance for Level 1 machine learning applications published last month. The agency is accepting comments on the guidance through June 30, 2021.

“Points of interest for future research activities, standards development, and certification exercises have also been identified,” EASA said in the report. “These will contribute to stimulating EASA efforts towards the introduction of AI/ML technology in aviation.”